Tech

Common Myths About Buying High Quality Backlinks – Debunked!

Backlinks are the currency of SEO. They help search engines gauge your site's authority, credibility, and visibility. Yet buying quality backlinks remains a confusing, even frightening, topic. Many marketers avoid it because of old notions and myths that no longer hold in 2025.

Let's take the most common myths about buying high-quality backlinks and debunk them one by one.

Myth 1: Purchasing Backlinks is a sure way to be penalised by Google.

This is the biggest myth of all. Google has warned against manipulative link-building methods for years, so people assume that every bought backlink is a liability. But the truth is more nuanced.

Google punishes spammy, low-quality, irrelevant backlinks, not every paid backlink. If you buy links from link farms, automated networks, or sketchy directories, then yes, you risk a penalty.

But when you buy high-quality backlinks from reputable, relevant websites where the link placement is natural, your site's rankings can benefit greatly.

Reality: Buying backlinks is not automatically dangerous; what matters is the quality and relevance of the links you buy.

Myth 2: Free Backlinks Are Always Better.

Some marketers feel they should pursue only free backlinks because free is safe. Organic backlinks are worth earning, but the process is slow and competitive. Publishing a link-worthy piece of content does not guarantee links unless you also do outreach, PR, or partnerships.

By buying quality backlinks, you can speed up your SEO progress and compete with bigger brands. It is like giving your website a head start in a crowded race.

Reality: Free backlinks are valuable, but high-quality bought ones can support your strategy and accelerate results.

Myth 3: More Links = Better Rankings

Another myth is that the more backlinks you buy, the higher you will rank. Quantity did count in the past. In 2025, however, Google's algorithms focus on quality rather than quantity.

Ten low-quality, irrelevant backlinks will not help your SEO at all (in fact, they can make it worse). Two backlinks from high-authority, niche-specific sites, however, can move the needle considerably.

Relevance, authority, and placement, not volume, should drive your link strategy.

Reality: What matters is not how many backlinks you buy but how good they are.

Myth 4: It is Unethical to Purchase Backlinks.

Some marketers regard buying backlinks as cheating or a breach of SEO ethics. But much of marketing involves paying for visibility. Sponsored content, paid collaborations, and influencer partnerships belong to the same category.

The difference lies in transparency and quality. If you buy links on spam sites purely to inflate your rankings, that is both unethical and dangerous. If you pay for placement on quality sites that genuinely match your niche, it is just good business.

In fact, many industry leaders invest in sponsored posts, collaborations, and content placements that include backlinks, because it works.

Reality: Buying good-quality backlinks is not unethical, provided it is done transparently and strategically.

Myth 5: Anchor Text Must Always Be Rich with Keywords.

When marketers buy backlinks, they often demand exact-match keywords as anchor text to maximise the SEO effect. Yet over-optimised anchor text looks unnatural and can raise red flags.

A healthy backlink profile includes:

- Branded anchors (company name)

- URL-based anchors (www.yoursite.com)

- Generic anchors (learn more, click here)

- Keyword-rich anchors (sparingly)

Reality: Varied anchor text matters more than cramming keywords into every bought backlink.
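
To make the anchor mix above concrete, here is a minimal Python sketch that sorts a list of anchor texts into the four types and reports each type's share. The brand name "acme", the list of generic phrases, and the classification rules are illustrative assumptions, not an industry standard; a real audit tool will use richer rules.

```python
from collections import Counter

# Hypothetical values for illustration only.
BRAND = "acme"
GENERIC_PHRASES = {"learn more", "click here", "read more", "this site"}


def classify_anchor(text, brand=BRAND):
    """Return 'url', 'generic', 'branded', or 'keyword' for one anchor text."""
    t = text.strip().lower()
    if t.startswith(("http://", "https://", "www.")):
        return "url"
    if t in GENERIC_PHRASES:
        return "generic"
    if brand in t:
        return "branded"
    # Anything else is treated as a keyword-rich anchor.
    return "keyword"


def anchor_mix(anchors, brand=BRAND):
    """Return the percentage share of each anchor type found in `anchors`."""
    counts = Counter(classify_anchor(a, brand) for a in anchors)
    total = sum(counts.values())
    return {kind: round(100 * n / total, 1) for kind, n in counts.items()}


if __name__ == "__main__":
    sample = ["Acme", "www.acme.com", "click here",
              "best running shoes", "Acme Shoes"]
    print(anchor_mix(sample))
```

If the keyword share dominates the result, that is a hint the profile may look over-optimised.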

Myth 6: Purchasing Backlinks = Instant Results.

Some marketers expect an instant payoff from buying backlinks. Quality backlinks can speed up SEO, but not overnight. Google must crawl, index, and evaluate new backlinks before rankings shift.

It usually takes a few weeks to a few months to see the real effect of purchased backlinks, depending on the competition, the quality of your content, and your site's existing SEO standing.

Reality: Quality backlinks can accelerate growth, but they will not deliver immediate rankings.

Myth 7: Every High-DA Site Provides High-Quality Backlinks in 2025.

Domain Authority (DA) and Domain Rating (DR) are often mistaken for backlink quality. These metrics are useful, but they are not the whole picture.

Even a site with a DA of 80 may link out to irrelevant or spammy material, which erodes the value of its links. Conversely, a niche-specific blog with a DA of 40 and real traffic can provide a stronger backlink for your brand.

When buying backlinks, consider:

- Relevance to your niche

- Quality of traffic and engagement

- Outbound link practices (does the site link to dubious material?)

Reality: A high DA does not necessarily mean high quality; context and relevance matter.

Myth 8: Buying Backlinks Replaces Content Creation.

Some marketers feel they can skip content creation altogether as long as they buy enough backlinks. Nothing could be further from the truth.

Backlinks do not replace your content; they amplify it. Even the best backlinks will not keep you at the top of the rankings without valuable, well-optimised content on your site. Google rewards websites that combine good content with good backlinks.

Think of backlinks as fuel and content as the engine. Fuel cannot get you anywhere without an engine.

Reality: You still need quality content for your backlinks to work.

Final Thoughts

Buying high-quality backlinks is not the SEO taboo many believe it to be. Done badly, it can hurt your rankings. Done well, it can help push your website toward the top of search results. Combined with a good content strategy and organic link building, buying quality backlinks is a strength, not something to fear. In today's competitive digital landscape, the question is no longer whether to build backlinks but how to build them intelligently.

Tech

How SEO Audits Influence Website Growth

In the fast-paced world of digital marketing, a website’s visibility can determine the success or failure of a business. With competition intensifying, regular website evaluations are crucial for identifying areas for improvement and capitalizing on new opportunities. For companies aiming to stay ahead, utilizing resources like a free SEO audit by Vazoola offers a strategic advantage, helping uncover optimization gaps and actionable improvements right from the start.

Regular SEO audits serve as a fundamental tool in maintaining a robust online presence. These audits not only highlight technical issues but also assess content relevance, user experience, and compliance with current search engine guidelines. Addressing these factors is essential for growing organic traffic, enhancing user engagement, and ultimately boosting website revenue.

Understanding SEO Audits

SEO audits are comprehensive reviews of the various elements that affect your site’s search engine rankings. These include an assessment of technical configurations, quality and relevance of content, backlink profiles, and overall site health. The audit process is designed to discover obstacles that impede search visibility and recommend optimizations that can drive measurable results. Regular audits help you maximize your potential in a competitive digital landscape and adapt quickly to the evolving algorithms of popular search engines, such as Google and Bing.

A thorough SEO audit examines everything from metadata and site structure to on-page keyword usage and internal linking practices. This multifaceted approach ensures that no critical aspect of your site is overlooked, providing a holistic perspective for future improvements. Addressing these findings yields tangible benefits, including increased organic visits, improved click-through rates, and enhanced authority within your niche.

Identifying Technical Issues

Technical SEO forms the backbone of website performance. Common technical challenges, such as slow loading times, mobile usability issues, broken links, improper redirects, and poor crawlability, are often hidden from regular site oversight. SEO audits use diagnostic tools to uncover these challenges efficiently. For example, Google PageSpeed Insights analyzes load speeds and Google Search Console reports crawl errors; fixing the issues they surface can directly improve your website’s rankings and indexing status.

Technical health extends beyond immediate fixes; it plays a crucial role in the quality of a visitor’s interaction with your website. Regular identification and correction of technical issues limit the risk of negative user experiences and search engine penalties, ensuring continued visibility and growth.
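
As a rough illustration of one such check, the Python sketch below collects the absolute links on a page and flags any that come back with an error status. It is a simplified stand-in for what audit tools do, not a description of any particular product. The function names, the "audit-sketch" user agent, and the convention of returning 0 for unreachable hosts are assumptions made for this example.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collects absolute http(s) links from <a href="..."> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href and href.startswith(("http://", "https://")):
                self.links.append(href)


def http_status(url, timeout=10):
    """Return the HTTP status for `url`, or 0 if the host is unreachable."""
    req = Request(url, method="HEAD", headers={"User-Agent": "audit-sketch"})
    try:
        with urlopen(req, timeout=timeout) as resp:
            return resp.status
    except HTTPError as err:   # 4xx and 5xx responses arrive as exceptions
        return err.code
    except OSError:            # DNS failure, refused connection, timeout
        return 0


def find_broken_links(html, check_status=http_status):
    """Return (url, status) pairs for links that fail or answer >= 400."""
    parser = LinkCollector()
    parser.feed(html)
    broken = []
    for url in parser.links:
        code = check_status(url)
        if code == 0 or code >= 400:
            broken.append((url, code))
    return broken
```

Passing the status checker in as a parameter keeps the link extraction testable without network access. Some servers reject HEAD requests, so a production check would likely fall back to GET.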

Enhancing Content Quality

Content remains a major driving force for SEO. Audits help to assess whether your content delivers real value, aligns with user intent, and remains up to date. By pinpointing thin, duplicated, or outdated pages, an SEO audit provides actionable steps to either refine or remove underperforming materials. These improvements uphold the website’s authority, aligning with Google’s Helpful Content Guidelines, which aim to reward publishers who create original and valuable content for their users.

Enhancing your content also involves reevaluating keywords, ensuring information accuracy, and adding relevant media, which collectively make the site more attractive to both visitors and search engines. Regular audits ensure that your site remains a trusted authority in its field, supporting long-term organic growth. For further insights into crafting high-quality content and digital best practices, explore advice from leading industry authorities at Search Engine Journal.
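
A simple way to picture how an audit pinpoints thin or duplicated pages is the Python sketch below. It flags pages under a word-count threshold and pages whose normalized text matches an earlier page exactly. The 300-word threshold and the exact-match hashing are assumptions chosen for illustration; real audit tools typically use fuzzier similarity measures.

```python
import hashlib
import re


def audit_pages(pages, min_words=300):
    """Map each URL to a list of issues: 'thin' and/or 'duplicate of <url>'.

    `pages` maps a URL to its extracted body text.
    """
    first_seen = {}  # content digest -> first URL that had this content
    report = {}
    for url, text in pages.items():
        # Normalize: lowercase and keep only word tokens.
        words = re.findall(r"\w+", text.lower())
        issues = []
        if len(words) < min_words:
            issues.append("thin")
        digest = hashlib.sha256(" ".join(words).encode("utf-8")).hexdigest()
        if digest in first_seen:
            issues.append(f"duplicate of {first_seen[digest]}")
        else:
            first_seen[digest] = url
        report[url] = issues
    return report
```

Pages that come back with an empty issue list pass both checks; the rest are candidates to refine, merge, or remove.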

Adapting to Algorithm Changes

Search engine algorithms are constantly evolving, often leaving unprepared sites at risk of ranking drops or penalties. SEO audits enable businesses to stay current with the latest changes, ensuring continued compliance and ongoing relevance. Proactively monitoring for updates—such as Google’s major core updates—helps sites adjust their strategies promptly, mitigating the risk of sudden traffic losses.

Adapting to updates may involve tweaking keyword strategies, adjusting technical settings, or refining specific content sections to meet new ranking factors. The regular audit cycles ensure your website remains competitive, regardless of how unpredictable search algorithms may become.

Improving User Experience

Website user experience is central to both visitor satisfaction and search engine performance. SEO audits rigorously evaluate site navigation, visual layout, content readability, and responsiveness across devices. By resolving UX issues such as confusing menus or clunky mobile interfaces, audits minimize bounce rates and encourage repeat visits.

Search engines are widely understood to reward sites that keep users engaged, and signals such as dwell time are often cited in this context. When your website is intuitive and enjoyable to use, both your audience and search algorithms take notice, leading to sustainable improvements in ranking and conversion rates. UX best practices are discussed in depth by reputable platforms, such as Moz Blog, providing guidance that complements audit-driven improvements.

Monitoring Backlink Profiles

Backlinks remain one of the strongest signals of website authority and trust in the eyes of search engines. An SEO audit meticulously assesses your backlink profile to highlight risky links from disreputable sources, as well as untapped opportunities for high-quality link acquisition. Removing or disavowing toxic links protects your site’s reputation, while focusing effort on building valuable relationships with respected domains enhances your authority and trustworthiness.

With continuous auditing, your backlink strategy evolves, reinforcing the site’s credibility and amplifying its search performance over time.
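
For the disavow step, Google's disavow tool accepts a plain text file with one URL or one `domain:` entry per line, and lines starting with `#` as comments. The Python sketch below builds such a file from a list of links an audit has flagged. The function name and the rule of disavowing a whole domain once it has two or more flagged URLs are assumptions for illustration; which links count as toxic remains a judgment call.

```python
from urllib.parse import urlparse


def build_disavow(toxic_urls, domain_threshold=2):
    """Return disavow-file text for the flagged backlink URLs.

    Hosts with at least `domain_threshold` flagged URLs are disavowed as a
    whole domain; other URLs are listed individually.
    """
    by_host = {}
    for url in toxic_urls:
        host = urlparse(url).hostname
        if host:  # skip entries that are not parseable absolute URLs
            by_host.setdefault(host, []).append(url)

    lines = ["# Disavow list generated from a backlink audit"]
    for host in sorted(by_host):
        urls = by_host[host]
        if len(urls) >= domain_threshold:
            lines.append(f"domain:{host}")
        else:
            lines.extend(sorted(urls))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    flagged = [
        "http://spam.example/a",
        "http://spam.example/b",
        "https://odd.example/page",
    ]
    print(build_disavow(flagged), end="")
```

Review the generated file by hand before uploading it, since disavowing a healthy link can cost you value.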

Conclusion

SEO audits are a vital asset for website growth, driving technical corrections, content enhancements, and a better overall user experience. By uncovering unseen challenges and guiding timely adjustments, they form a roadmap for continuous improvement in a rapidly shifting digital environment. Staying proactive with regular audits supports your site’s online success, competitiveness, and lasting value in search engine rankings.

Tech

Best Practices for Selecting Enterprise Tower Servers

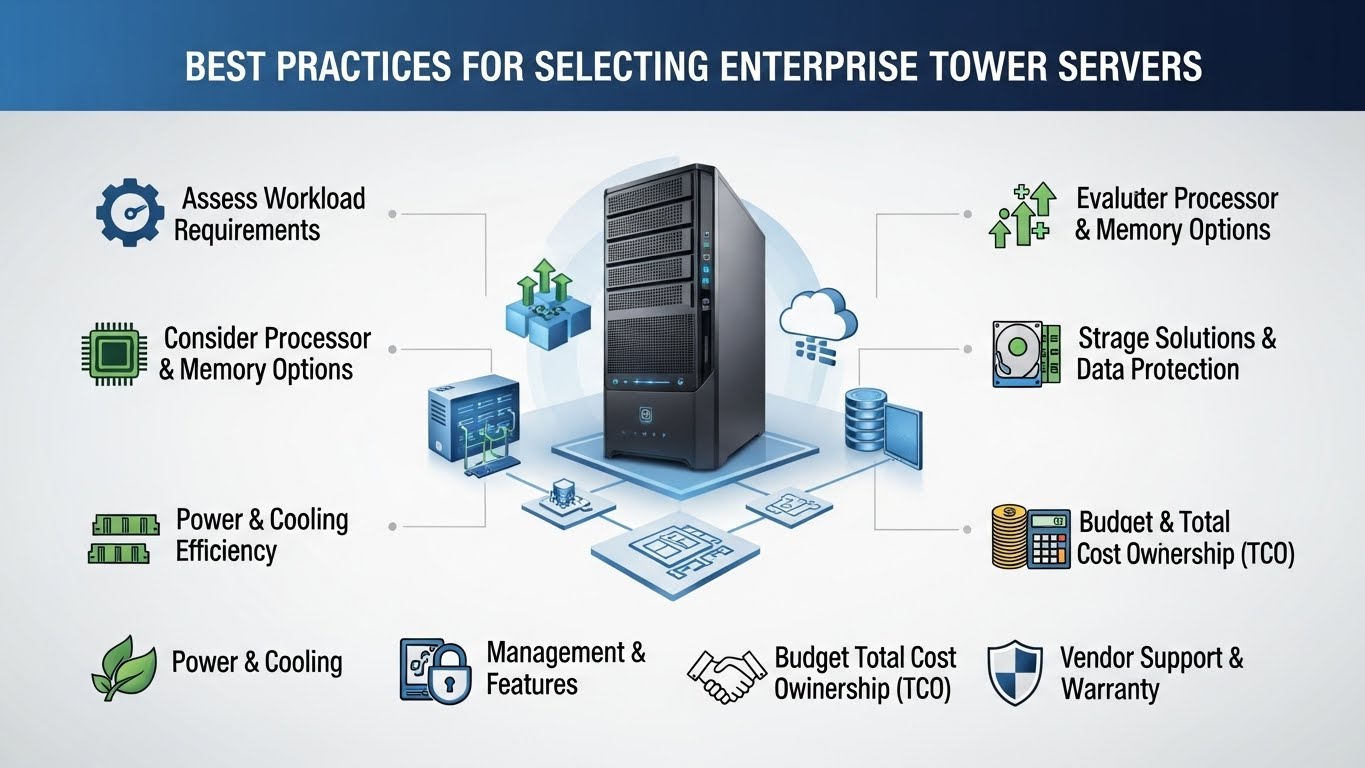

Assessing Business Requirements

Selecting the correct enterprise tower server requires a thorough understanding of your organization’s specific needs, including the number of concurrent users, application workloads, data volume, and uptime requirements. This assessment helps prevent performance issues and unnecessary costs. Gathering input from various departments enables decision-makers to gauge IT needs accurately, avoiding both over-provisioning and underestimation.

For businesses with evolving IT environments, it’s also essential to identify the expected future expansion of software applications, data processing, and backup requirements. This proactive approach not only helps with smoother integration but also prevents frequent hardware overhauls that can disrupt ongoing business operations. Leaders increasingly look to proven hardware, such as Nfina high-performance tower servers, for robust and customizable options tailored to accommodate a wide range of business scenarios, ensuring readiness for both current workflows and future growth.

Collaborating with department heads and IT teams helps identify priority features tailored to business needs, such as security for sensitive data or high performance for design work. Involving stakeholders prevents missed requirements, while assessing business continuity needs, such as disaster recovery and data redundancy, ensures scalable and reliable tower server performance.

Performance and Scalability

Modern enterprise servers must seamlessly integrate high-performance computing with the ability to scale alongside your organization. Selecting servers equipped with multi-core CPUs, ample RAM, and high-speed SSD storage yields substantial productivity gains across various business applications. These hardware components enable rapid data processing, faster application response times, and the ability to handle diverse or resource-intensive workloads efficiently. However, equally vital is scalability—opting for modular servers allows for expansion without the need to replace the entire system, a key advantage as business workloads and storage needs increase or evolve.

As your business grows, unplanned hardware upgrades can be disruptive; therefore, it’s essential to choose a server architecture that allows for additional CPUs, memory, and storage options. This flexibility lets IT teams respond quickly to spikes in demand or unforeseen challenges, keeping your organization agile and competitive. Consider modular chassis designs and hot-swappable components that facilitate easy upgrades with minimal downtime, reducing the impact on business continuity and user experience.

Processor and Memory Considerations

When evaluating server performance, prioritize systems featuring the latest generation processors and sufficient memory to support virtualization, advanced analytics, and complex database workloads. Higher core counts and fast memory bandwidth enable better multitasking, allowing multiple applications or virtual machines to run concurrently without interruption. Upgradable memory and storage options should not be overlooked, as they help the server keep pace with new software releases, growing user bases, and unpredictable surges in activity.

Workload Compatibility

Ensure the selected server is validated and tested for all mission-critical business software, including enterprise resource planning (ERP), database management solutions, and virtual desktop infrastructure environments. Matching server architecture with your specific workloads ensures operational efficiency, which is often highlighted in enterprise server reviews by publications such as ITPro. Proper workload compatibility ensures that the server delivers the expected performance outcomes and minimizes the risk of application failures or slowdowns.

Security Features

Security safeguards built at the hardware level can mean the difference between sustained uptime and costly data breaches. Look for essential features such as hardware-based encryption, secure boot capabilities, Trusted Platform Modules (TPMs), and regular and automatic firmware updates. Enhanced protections may also include integrated firewalls and advanced intrusion detection systems, shielding sensitive and mission-critical data from both external hackers and insider threats.

As cyberattacks continue to evolve, preparing your enterprise with multi-layered security—spanning both physical and digital realms—proves invaluable for maintaining business continuity and compliance. Selecting server options that facilitate ongoing compliance with regulations such as GDPR, HIPAA, or PCI-DSS is crucial for organizations operating in highly regulated sectors. Implementing these advanced security features in your network infrastructure reduces liability and demonstrates your commitment to privacy and data protection.

Total Cost of Ownership

The sticker price is only one component of an enterprise server investment. Calculating the total cost of ownership (TCO) provides insight into ongoing expenses, including energy consumption, maintenance contracts, technical support, and potential losses resulting from downtime. For many organizations, a slightly higher initial investment can be offset by lower long-term operational costs, an extended lifespan, or energy-saving features that reduce overhead.

Evaluating warranties, service level agreements, support terms, and energy consumption ratings upfront can result in significant financial savings over the server’s lifetime and ensure maximum value from your investment. Research compiled by TechRadar frequently cites value-driven server solutions that excel in reliability and scalable pricing models for businesses of all sizes. Factoring TCO into decisions encourages long-term thinking and more strategic resource allocation.
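
The TCO reasoning above can be made concrete with a small Python sketch. It adds the purchase price to the yearly energy, support, and downtime costs over the server's service life. All figures and field names here are hypothetical, and the model deliberately ignores factors such as cooling overhead, financing, and resale value.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class ServerCosts:
    purchase_price: float           # sticker price
    avg_power_watts: float          # average draw under typical load
    price_per_kwh: float            # electricity rate
    annual_support: float           # maintenance contract and support
    downtime_hours_per_year: float  # expected outage hours per year
    cost_per_downtime_hour: float   # business loss per outage hour


def total_cost_of_ownership(c, years):
    """Return purchase price plus `years` of energy, support, and downtime."""
    energy = c.avg_power_watts / 1000 * HOURS_PER_YEAR * c.price_per_kwh
    downtime = c.downtime_hours_per_year * c.cost_per_downtime_hour
    return c.purchase_price + years * (energy + c.annual_support + downtime)


if __name__ == "__main__":
    # Hypothetical comparison: a cheaper server versus a more efficient one.
    budget = ServerCosts(3000, 400, 0.20, 500, 6, 500)
    efficient = ServerCosts(4000, 250, 0.20, 300, 2, 500)
    for name, server in (("budget", budget), ("efficient", efficient)):
        print(name, round(total_cost_of_ownership(server, years=5), 2))
```

With these made-up inputs, the server with the higher sticker price comes out cheaper over five years, mainly because of its lower downtime and energy costs.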

Energy Efficiency

Energy-efficient tower servers are critical for organizations seeking both cost savings and a sustainable IT infrastructure. Features such as low-power CPUs, SSD storage, and highly optimized cooling systems help reduce power usage and lower overall expenditures associated with running business technology daily. Many modern tower servers are designed for minimal noise output, supporting open-plan offices or space-limited environments where excess heat or sound can be disruptive.

Supporting the company’s sustainability objectives also means selecting servers built with eco-friendly materials and supported by responsible recycling or take-back programs—responsible choices that enhance corporate social responsibility. Beyond the positive environmental impact, lower power consumption directly translates to lower utility bills and a smaller carbon footprint. By adopting energy-efficient technologies, organizations can also improve their public image and appeal to customers who value environmental stewardship.

Vendor Support and Warranty

Responsive vendor support and comprehensive warranty options are non-negotiable for enterprise server deployments. Reliable support not only expedites troubleshooting when technical issues arise but also minimizes server downtime, preserving business continuity and productivity. It’s wise to examine a vendor’s track record, customer reviews, coverage details, and average response times closely before finalizing any purchasing decision.

Comprehensive warranties—including options such as next-business-day service, on-site repairs, and readily available spare parts—help mitigate the risks associated with hardware failures. Choosing a vendor renowned for customer service can make a clear difference in ongoing server performance and overall satisfaction, reducing anxiety related to unforeseen technical setbacks.

Future-Proofing

IT infrastructure should be agile enough to support your organization’s future ambitions. Select tower servers that offer a robust suite of future-ready features, such as advanced virtualization support, flexible input/output (I/O) expansion slots, and the ability to integrate emerging storage technologies. This forward-thinking approach extends the operational lifespan of your IT investment and reduces the need for disruptive, large-scale replacements as your requirements grow or change.

Keeping server technology closely aligned with your growth strategy enables your business to pivot quickly and capitalize on new market trends, delivering sustained value even as digital transformation accelerates change within your sector. Proactive future-proofing supports rapid responses to emerging opportunities or challenges, enabling your organization to remain resilient and adaptable in an increasingly competitive landscape.

Conclusion

Selecting the optimal enterprise tower server is a nuanced decision that demands alignment with organization-specific business needs, clear performance expectations, security priorities, and cost considerations. Prioritizing future-proof features and robust vendor support ensures your infrastructure remains resilient, scalable, and adaptable, empowering your business for long-term success in a constantly evolving digital landscape. Take the time to engage all departments, analyze future growth projections, and weigh the full scope of ownership costs. These best practices will help ensure your enterprise tower server investment is a strategic and enduring asset for years to come.

Tech

The Ultimate Review of My Pa Resource.com: Is It Worth Your Time?

Introduction to MyPARESource.com

Are you on the hunt for a reliable source of information and resources? Look no further than MyPARESource.com! This website promises to deliver valuable insights, tools, and services tailored to meet your needs. But does it truly live up to the hype? As we dive into this ultimate review, we will explore every aspect of MyPARESource.com—its purpose, features, user experience, pricing plans, and even what customers have to say. Whether you’re considering signing up or just curious about its offerings, get ready for an in-depth look that will help you make an informed decision. Let’s uncover whether myparesource.com is worth your time!

What is the purpose of the website?

MyPARESource.com serves a distinct purpose in the realm of online resources. It is designed to help users find and access valuable information related to various topics, particularly focused on personal and professional development.

The website acts as a hub for curated content that caters to individuals seeking knowledge enhancement. Whether you’re looking for educational materials or practical advice, MyPARESource.com aims to provide relevant insights tailored to your needs.

Moreover, it emphasizes community interaction. Users can engage with others who share similar interests, fostering an environment of shared learning and support. This platform encourages collaboration while delivering quality resources that empower its audience.

With easily accessible tools and guides, MyPARESource.com strives to simplify the journey toward self-improvement and informed decision-making in everyday life.

Features and Services offered by MyPARESource.com

MyPARESource.com offers a range of features tailored to enhance user experience. The platform provides access to a vast library of resources designed for patient advocacy and education. Users can find articles, videos, and downloadable materials that cover various health topics.

One standout service is the personalized consultation feature. This allows users to connect with healthcare professionals who can offer tailored advice based on individual needs.

The website also includes interactive tools such as symptom checkers and health trackers. These tools help users monitor their conditions effectively.

Additionally, MyPARESource.com hosts webinars and online workshops led by experts in the field. This creates opportunities for learning while engaging with others facing similar challenges.

Community forums are another valuable aspect of the site, fostering connections among users who share experiences or seek support from one another.

User Experience and Ease of Navigation

User experience is crucial when it comes to online platforms, and MyPARESource.com does not disappoint. The website greets visitors with a clean layout that makes exploring effortless.

Navigating through various sections feels intuitive. Users can find what they need without unnecessary clicks or complications. Clear menus guide you seamlessly from one feature to the next.

The search function stands out as particularly efficient. It quickly surfaces relevant content, saving users valuable time.

Mobile responsiveness adds another layer of convenience, allowing access on any device without compromising usability. Whether you’re at home or on the go, information is just a tap away.

Visually appealing design elements enhance engagement while maintaining clarity. It’s designed for ease rather than confusion—a welcome aspect in today’s digital landscape.

Pricing Plans and Packages

MyPARESource.com offers a range of pricing plans tailored to fit different needs. Users can choose from various packages, each designed with specific features in mind.

The basic plan provides essential access at an affordable rate, making it ideal for beginners or casual users. For those seeking more advanced tools and resources, the premium options offer extensive capabilities.

Monthly subscriptions are available, allowing flexibility for users who may not want long-term commitments. Discounts are often provided for annual subscriptions, which is a great incentive for serious learners.

Each package generally includes customer support and additional resources that enhance the overall experience on the platform. This tiered pricing approach allows individuals to select what best aligns with their goals and budget constraints without feeling overwhelmed by unnecessary features.

Customer Reviews and Testimonials

Customer reviews and testimonials are crucial when evaluating MyPARESource.com. Users often share their personal experiences, shedding light on the platform’s effectiveness.

Many appreciate the wealth of resources available. They highlight how easy it is to access information that would otherwise take hours to find. This efficiency resonates well with busy professionals seeking quick solutions.

Some users express satisfaction with customer support. Quick responses and helpful guidance make navigating challenges much smoother. Positive interactions can significantly enhance user experience.

However, not all feedback is glowing. A few customers mention frustrations with occasional glitches or slow load times. These issues can impact usability but seem infrequent for most users.

Diverse experiences paint a balanced picture of MyPARESource.com’s offerings and reliability in meeting user needs.

Comparison with Similar Websites

When comparing MyPARESource.com to similar platforms, several aspects stand out. Many competitors focus solely on specific niches, while MyPARESource.com offers a broader range of services.

For instance, websites like Healthline or WebMD provide valuable health information but lack the tailored support options available at MyPARESource.com. Users seeking personalized advice may find this platform more beneficial.

Another competitor is CareDash, which excels in doctor reviews but does not match the community engagement features present on MyPARESource.com. The interactive elements can enhance user experience significantly.

Some sites offer free content with limited access to premium features. In contrast, MyPARESource.com balances affordability and comprehensive resources effectively. This diversity helps it cater to varied user needs without sacrificing quality or accessibility.

Pros and Cons of Using MyPARESource.com

MyPARESource.com offers several advantages that are hard to overlook. For starters, it provides a wealth of resources and information tailored for those seeking assistance in personal development. Users appreciate the intuitive layout, which makes finding relevant content quick and easy.

However, there are some downsides to consider. A few users report occasional glitches or slow loading times on certain pages, which can be frustrating. Additionally, while many features are free, premium content comes with a cost that might not suit everyone’s budget.

The community aspect is another double-edged sword. While engaging with others can foster support and motivation, some feel overwhelmed by the sheer volume of posts and comments.

Weighing these pros and cons helps potential users decide if MyPARESource.com fits their needs perfectly or if they should explore other options available online.

Conclusion: Is It Worth Your Time?

When evaluating MyPARESource.com, it’s essential to consider its various aspects. The website offers a unique platform designed for those seeking peer-reviewed resources and information on various topics. With an intuitive layout, users can easily navigate through the wealth of content available.

The features and services provided cater well to individuals looking for reliable sources. Whether you’re a student or just someone wanting to expand your knowledge base, there’s something here for you. The pricing plans are also competitive, allowing access without breaking the bank.

Customer feedback reveals a mixed bag of experiences. While many appreciate the depth of information offered, some feel there are areas where improvements could be made. Comparatively, MyPARESource.com stands out against similar platforms in certain niches but may lack in others.

Pros include user-friendly design and valuable content; cons involve potential limitations in scope compared to larger competitors. Your experience will largely depend on what you seek from such a resourceful site.

Deciding whether MyPARESource.com is worth your time hinges on personal needs and expectations. If you’re eager for well-organized information at an accessible price point, this platform might just fit the bill perfectly.
